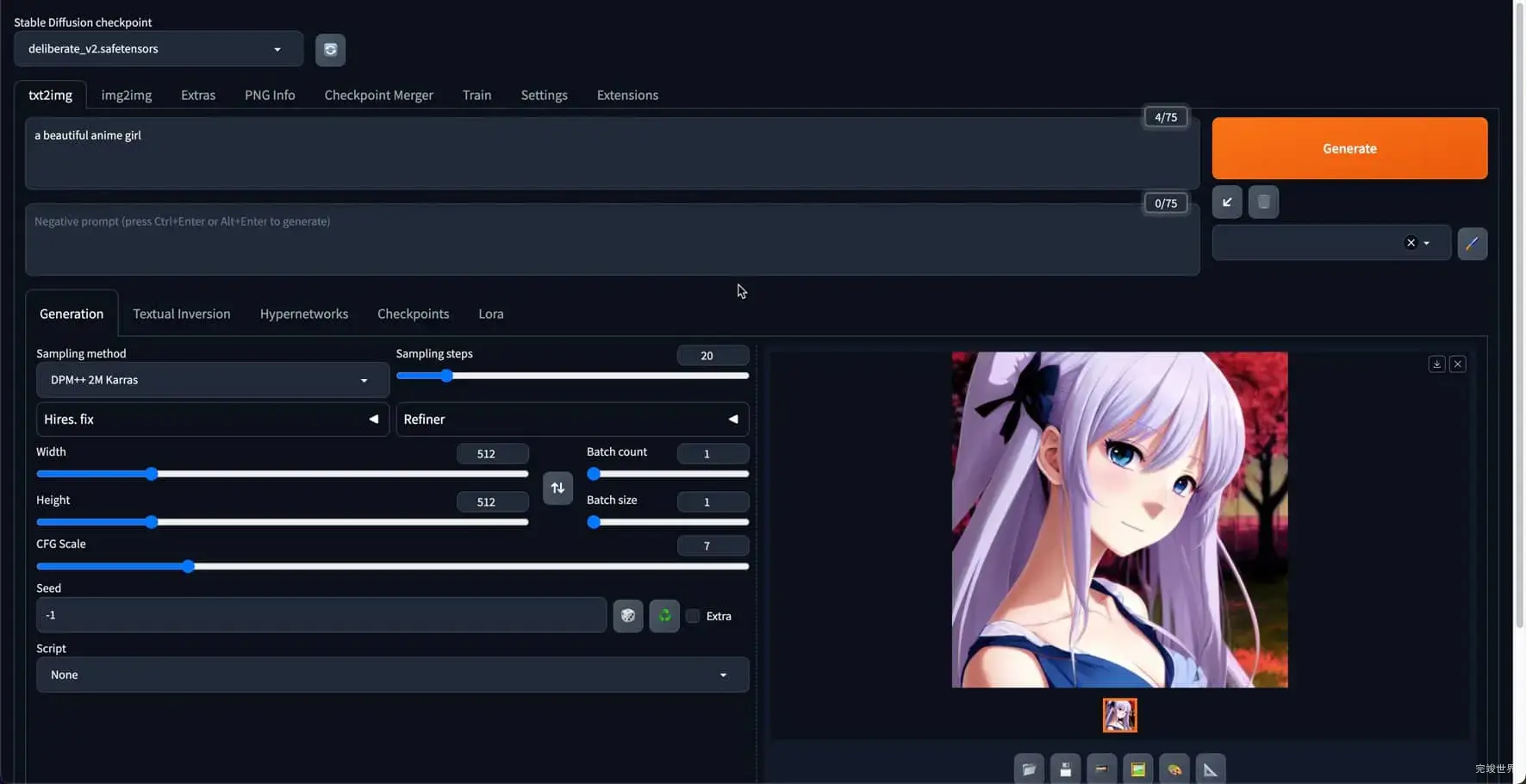

Stable Diffusion threw the following error when I tried to generate an image:

    creating model quickly: OSError
    Traceback (most recent call last):
      File "/usr/local/Cellar/python@3.10/3.10.13_1/Frameworks/Python.framework/Versions/3.10/lib/python3.10/threading.py", line 973, in _bootstrap
        self._bootstrap_inner()
      File "/usr/local/Cellar/python@3.10/3.10.13_1/Frameworks/Python.framework/Versions/3.10/lib/python3.10/threading.py", line 1016, in _bootstrap_inner
        self.run()
      File "/usr/local/Cellar/python@3.10/3.10.13_1/Frameworks/Python.framework/Versions/3.10/lib/python3.10/threading.py", line 953, in run
        self._target(*self._args, **self._kwargs)
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/modules/initialize.py", line 147, in load_model
        shared.sd_model  # noqa: B018
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/modules/shared_items.py", line 110, in sd_model
        return modules.sd_models.model_data.get_sd_model()
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/modules/sd_models.py", line 499, in get_sd_model
        load_model()
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/modules/sd_models.py", line 602, in load_model
        sd_model = instantiate_from_config(sd_config.model)
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/util.py", line 89, in instantiate_from_config
        return get_obj_from_str(config["target"])(**config.get("params", dict()))
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/diffusion/ddpm.py", line 563, in __init__
        self.instantiate_cond_stage(cond_stage_config)
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/diffusion/ddpm.py", line 630, in instantiate_cond_stage
        model = instantiate_from_config(config)
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/util.py", line 89, in instantiate_from_config
        return get_obj_from_str(config["target"])(**config.get("params", dict()))
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/modules/encoders/modules.py", line 103, in __init__
        self.tokenizer = CLIPTokenizer.from_pretrained(version)
      File "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui/venv/lib/python3.10/site-packages/transformers/tokenization_utils_base.py", line 1809, in from_pretrained
        raise EnvironmentError(
    OSError: Can't load tokenizer for 'openai/clip-vit-large-patch14'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'openai/clip-vit-large-patch14' is the correct path to a directory containing all relevant files for a CLIPTokenizer tokenizer.
    Failed to create model quickly; will retry using slow method.

Cause

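`openai/clip-vit-large-patch14` on Hugging Face can no longer be accessed from mainland China, so WebUI cannot download the tokenizer files and `CLIPTokenizer.from_pretrained` raises the `OSError` above.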
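To confirm that network access really is the problem on your machine, you can check whether the model page responds at all. This is a minimal sketch using only the Python standard library; `can_reach` is a hypothetical helper name, and it treats any HTTP response (even a 4xx error) as "reachable", since that still proves the host answered.

```python
import urllib.error
import urllib.request


def can_reach(url: str, timeout: float = 5.0) -> bool:
    """Hypothetical helper: return True if the server at `url` sends any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with an error status (e.g. 403/404),
        # so the host itself is reachable.
        return True
    except OSError:
        # URLError is a subclass of OSError; this branch also covers
        # DNS failures, refused connections and timeouts.
        return False
```

For example, if `can_reach("https://huggingface.co/openai/clip-vit-large-patch14")` returns `False`, the tokenizer download is being blocked and the manual fix below applies.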

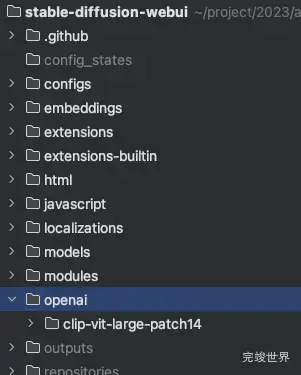

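The fix is to supply the files manually: create an `openai` directory in the stable-diffusion-webui root (the directory WebUI is launched from) and move the unzipped download into it, so that the files end up under `openai/clip-vit-large-patch14/`. transformers first checks whether `openai/clip-vit-large-patch14` exists as a local directory; if it does, the tokenizer is loaded from disk and Hugging Face is never contacted.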
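The copy step can also be scripted. This is a minimal sketch under a few assumptions: `install_clip_files` is a hypothetical helper, `unzipped_dir` is the folder that directly contains the downloaded files, and the target `openai/clip-vit-large-patch14` is resolved against the stable-diffusion-webui root, mirroring the relative path in the error message. It also checks for `vocab.json` and `merges.txt`, the two vocabulary files `CLIPTokenizer` reads.

```python
from __future__ import annotations

import shutil
from pathlib import Path

# The two vocabulary files CLIPTokenizer loads; the download usually also
# contains tokenizer_config.json, special_tokens_map.json, etc.
REQUIRED_TOKENIZER_FILES = ("vocab.json", "merges.txt")


def install_clip_files(unzipped_dir: str | Path, webui_root: str | Path) -> Path:
    """Hypothetical helper: copy the unzipped files into
    <webui_root>/openai/clip-vit-large-patch14 and return that directory.

    Assumes WebUI is launched from webui_root, so the relative path
    'openai/clip-vit-large-patch14' in the error message resolves there.
    """
    src = Path(unzipped_dir)
    dest = Path(webui_root) / "openai" / "clip-vit-large-patch14"
    # Create the openai directory (no error if it already exists).
    dest.parent.mkdir(parents=True, exist_ok=True)
    # Copy everything from the unzipped folder, merging into dest if present.
    shutil.copytree(src, dest, dirs_exist_ok=True)
    # Fail loudly if the copied folder lacks the tokenizer files.
    missing = [n for n in REQUIRED_TOKENIZER_FILES if not (dest / n).is_file()]
    if missing:
        raise FileNotFoundError(f"{dest} is missing: {', '.join(missing)}")
    return dest
```

For example, `install_clip_files("/path/to/clip-vit-large-patch14", "/Users/zhaowanjun/project/2023/ai/stable-diffusion-webui")`, then restart WebUI so the model is loaded again.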

Download link

Link (Baidu Netdisk): https://pan.baidu.com/s/1wXhdDPbZsFxSLHCQK7uNew Extraction code: pn2j